Jason Fletcher

VJ Loop Artist

VJ Loops

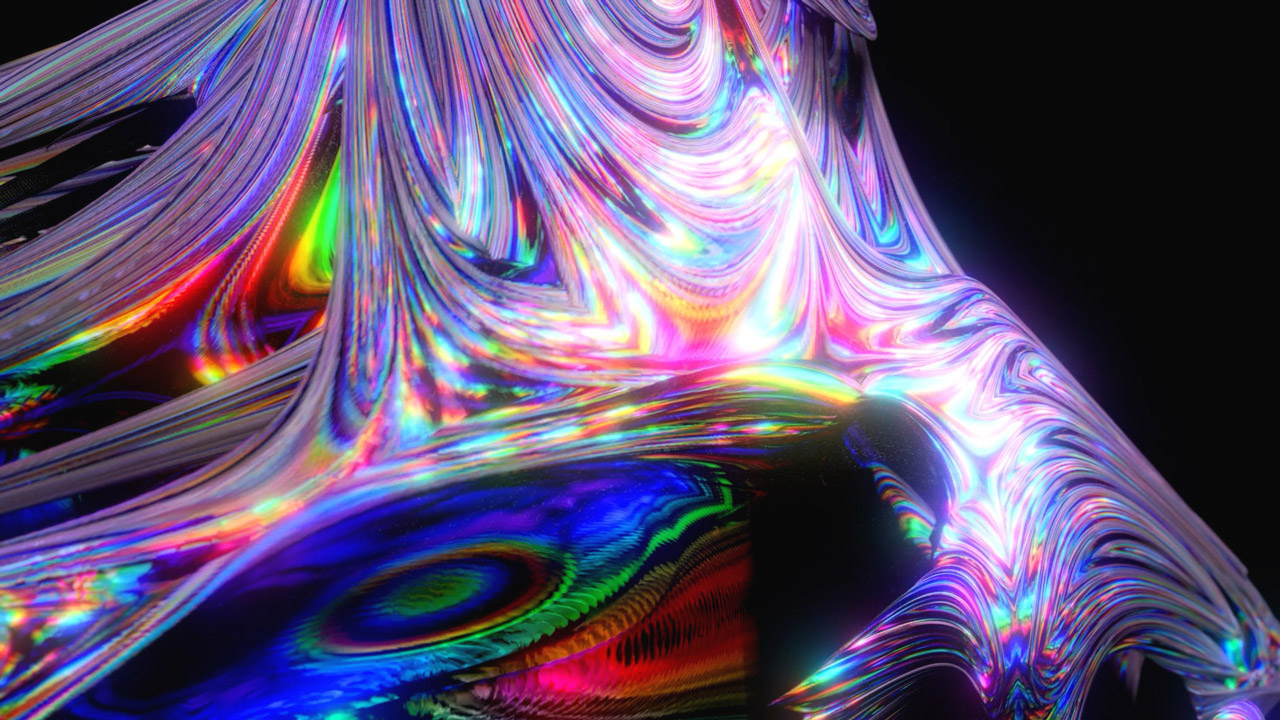

Concert Visuals by ISOSCELES

Welcome to my laboratory. I frequently experiment with 3D animation, machine learning, and compositing. I'm also one of the developers of NestDrop. Every month I release a new VJ pack on Patreon.